The recent history of technology in 30 words or less: New approaches are needed for old and new problems; IT departments and vendors try to do more with fewer resources; and one undeniable reality: every company is a data company.

And at the heart of all of this? One of bipp’s favorite things: data models. But we’re not talking about your father’s data model. The truth is, there’s more to modern data modeling than meets the eye, especially if your eye is on the future.

Let’s begin - as they say - at the beginning. From my point of view, data modeling bridges the technical and business worlds. A data model must both support and enable business processes and decisions. And building a data model requires both technical skills and an understanding of how your broader business functions.

That Was Then

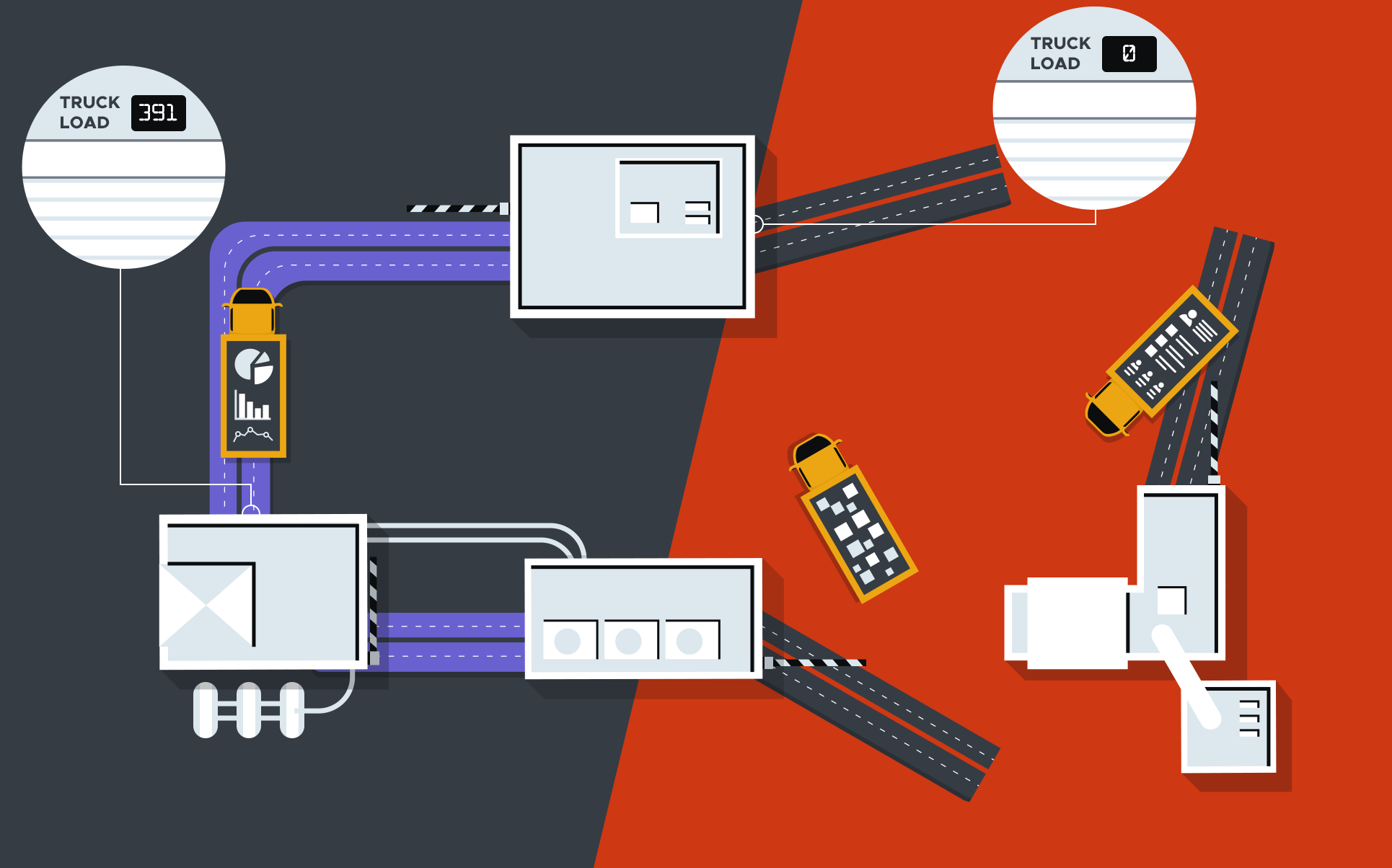

When we talk about the origins of data modeling, we’re talking about building a model for every data or analysis request from business users. In the early days, the IT department was responsible for all the data loading, handling, and modeling. As a result, data analysts could only use pre-defined models to create reports and analyses using tools such as Cognos.

This was incredibly inefficient: every change to a model or to the data required a very detailed request to the IT department, which made the approach time-consuming and error-prone. In time, the shortcomings became too limiting, and as the technology improved, the data modeling ‘power’ shifted to BI or data analysts.

Though an improvement, this approach still saw queries being written over and over again. This was still very time-consuming, and success often relied on the analysts’ experience and history with their company. For example, particular metrics may be defined differently by the company overall and its marketing department. If the analyst didn’t know about this quirk, this could lead to wrong analyses and force multiple iterations of the same request (until they got it right).

Analysts using older, legacy data modeling software didn’t always create the models themselves and needed to request new data and models from their data engineers. This could take days or even weeks. So much for every company being a data company!

Old-fashioned approaches to data modeling also typically meant the data knowledge was found in one person’s notebook. As a result, knowledge was unstructured and not systematized - and not shared across the organization. This added another worrying variable - the analyst’s concentration levels - which meant the same analysis could provide different numbers in different iterations. And if the analyst left? The ‘notebook’ was gone, and everything fell apart.

Pressure was also growing for business users to make their own reports, dashboards, and analyses. So, they were provided with direct access to data using more visual software. But the trade-off was that this simplified approach made data modeling inflexible. And every new report still required building a new data model.

And, as is the way of competing software companies, the early tools were largely closed ecosystems, which meant little knowledge transferred between them. As a result, using a tool in more advanced ways frequently required learning its own complex, tool-specific language.

This Is Now

This brings us to today. Modern data modeling is everything traditional modeling isn’t. First of all, it adds a data modeling layer. This is the analyst’s notebook we were talking about, but this time it’s a notebook that’s shared across the organization. The data modeling layer sits on top of the database and contains the logic that maps everything from business rules and definitions to data storage rules.

Second, modern data modeling uses data modeling languages. They are usually based on SQL, which flattens the learning curve for BI and data analysts. With this modern approach to data modeling, the data model only needs to be defined once and can then be reused as many times as needed.

This also means that the data experts define the logic behind business metrics at a model level. This way, all other users will use the same data with the same definitions, ensuring comparability and uniformity.
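To make the "define once, reuse everywhere" idea concrete, here is a minimal Python sketch. It is not bipp's modeling language: the `MODEL` dictionary, the `orders` table, and the `revenue` and `order_count` metrics are all hypothetical names chosen for illustration. The point is only that two different reports pick up the same metric definition from one shared place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    sql: str  # SQL expression, written once by the data team


# Hypothetical shared model: one table and the metrics defined on it.
MODEL = {
    "table": "orders",
    "metrics": {
        "revenue": Metric("revenue", "SUM(amount - discount)"),
        "order_count": Metric("order_count", "COUNT(*)"),
    },
}


def build_query(metric_names, group_by):
    """Build a query from shared metric definitions instead of ad hoc SQL."""
    columns = [group_by]
    for metric_name in metric_names:
        metric = MODEL["metrics"][metric_name]
        columns.append(f"{metric.sql} AS {metric.name}")
    return f"SELECT {', '.join(columns)} FROM {MODEL['table']} GROUP BY {group_by}"


# Two teams, two reports, one definition of "revenue".
marketing_report = build_query(["revenue"], "campaign")
finance_report = build_query(["revenue", "order_count"], "region")
```

If the definition of revenue ever changes, it changes in one place, and both reports follow.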

Business users benefit too: the interfaces remain simple, and using them doesn’t require any high-level technical knowledge. They can manage dashboards using drop-down menus and point-and-click GUIs.

The Tao of bipp Data Modeling

We designed the bipp BI tool for BI and data analysts from day one. Data modeling is at the core of our proposition. Our data modeling language uses SQL syntax, and it was developed with simplicity in mind. That’s why only basic SQL knowledge is required to master it. Our language connects to all the most widely used databases and allows you to create reusable data models.

bipp’s auto-SQL generator frees business users from having to write any SQL code. Instead, it uses the joins defined at the model level to create the SQL code in the background. This lets business users run ad hoc reports in a true self-service BI environment.
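As a rough illustration of generating SQL from model-level joins, consider the Python sketch below. It is a simplified stand-in, not bipp's actual generator: the `JOINS` mapping, the `BASE_TABLE` name, and the `generate_sql` helper are hypothetical. The user only picks columns; the join clauses come from definitions stored once in the model.

```python
# Hypothetical model: join conditions declared once, keyed by (base, joined) table.
JOINS = {
    ("orders", "customers"): "orders.customer_id = customers.id",
    ("orders", "products"): "orders.product_id = products.id",
}
BASE_TABLE = "orders"


def generate_sql(columns):
    """Build a SELECT for fully qualified columns such as 'customers.country'."""
    joined_tables = []
    for column in columns:
        table = column.split(".")[0]
        # Only tables other than the base table need a JOIN clause.
        if table != BASE_TABLE and table not in joined_tables:
            joined_tables.append(table)

    lines = [f"SELECT {', '.join(columns)}", f"FROM {BASE_TABLE}"]
    for table in joined_tables:
        lines.append(f"JOIN {table} ON {JOINS[(BASE_TABLE, table)]}")
    return "\n".join(lines)


# The business user chooses three columns and never writes a join.
sql = generate_sql(["customers.country", "products.category", "orders.amount"])
print(sql)
```

A real generator would also handle aggregation, filters, and multi-hop joins; this sketch covers only the lookup of predefined join conditions.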

The bipp approach to data modeling also includes Git version control. Its purpose is to track changes in your project, ensuring you always use the correct version of your data model. Git enables a business-wide single source of truth, as an update to the original file carries through to all the files connected with it.

Rather than only following the current trends in data modeling, we’re trying to set new ones. And the future is the visual data modeling layer.

The Future - Visual Data Modeling Layer

Having both a data modeling language and visual modeling capabilities is the latest data and analytics trend. This approach ensures a flexible tool for the technically skilled and democratization of data for less technical people throughout an organization.

But the future will definitely be built with visual data modeling. With the power of SQL expressions and innovative visual capabilities, you can model even the complex structure of your data visually. For example, arbitrary join conditions, one-to-many and many-to-many join relationships, dynamic window aggregations, and level-of-detail (LOD) calculations can all be managed with a point-and-click interface, without learning a new language.
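For readers who have not met LOD (level-of-detail) calculations, the sketch below shows the kind of SQL a visual layer would otherwise hide. It is an assumption-laden illustration, not output from bipp: the `orders` table and its columns are made up, and the query runs against an in-memory SQLite database from Python's standard library. Each customer-level row is enriched with a total computed at the coarser region level.

```python
import sqlite3

# Hypothetical sample data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("EU", "a", 10.0), ("EU", "b", 30.0), ("US", "c", 50.0)],
)

# The report is at customer level, but region_total is fixed at region level:
# an aggregate computed at a different level of detail than the rows around it.
rows = conn.execute(
    """
    SELECT o.customer, o.amount, r.region_total
    FROM orders AS o
    JOIN (
        SELECT region, SUM(amount) AS region_total
        FROM orders
        GROUP BY region
    ) AS r ON r.region = o.region
    ORDER BY o.customer
    """
).fetchall()

for customer, amount, region_total in rows:
    print(customer, amount, region_total)
```

Writing the nested aggregate and the join back by hand is exactly the step a point-and-click LOD column is meant to replace.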

bipp’s GUI-based data model layer lets business users define all the model details simply: LOD columns, custom metrics, parameters, and join relationships - virtually everything that would have been done in the past by writing data modeling language code.

Our goal is the ultimate data democratization: removing all the bottlenecks in accessing the data. Or, in other words, helping data engineers use the stuff they already know - SQL - to empower business users to explore data on their own.

Summary

Some would say there are no wrong approaches to data modeling in BI; we at bipp disagree. Legacy platforms require you to move your data before you can gain insight from it, and we’re firmly against that. Current approaches allow us to balance data democratization against the complexity of data modeling.

We firmly believe visual data modeling is the future - putting the power of data modeling in everyone’s hands - and helping every company become a data company.

If you want to check out which approach works best for you and your team, sign up for a demo.